Migrating an application from one cloud to another is challenging: one must guard against both incompatibilities and data loss. It is, however, often necessary, so a way to automate the process and ensure a working deployment on the other end is a handy tool for any administrator. Since we have been working with multi-cloud and cross-cloud environments and application portability (see paper and blog), we present a tool that automates this process for Openshift.

As far as use cases for migration go, the easiest example to visualize is moving an application from the development environment to production. Minishift, the single-node local development version of Openshift, is a great way to develop and test a new application, isolated from the risks and expenses of exposing it to the outside world. But at some point this application will need to be recreated on a production Openshift instance, and while doing this ‘traditionally’ is easy for small applications, it can become cumbersome for larger ones, especially if parts of them were configured through the graphical dashboard.

Other cases could include moving from one production environment to another (e.g., migrating across data centers) or even backing up a production application to the development environment.

Yet, even though this type of migration can be quite common, it is not adequately supported by the native tools. Even the export functionality of kubectl (and its Openshift counterpart, oc) has proven insufficient for producing a ready-to-import template, and it is now being deprecated, essentially leaving it to third-party developers to implement import-export functionality for applications.

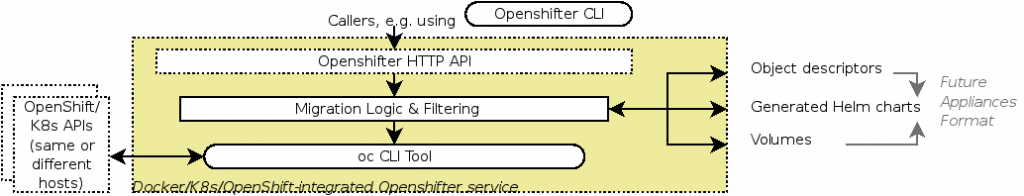

That’s where Openshifter comes in. Its main design goal is to extract a template of an existing application, together with the data stored in its persistent volumes, package both into an enhanced Helm chart (a ‘fat chart’, since it also contains the volume data), and then import that chart into a different Openshift instance without worrying that the template will be incompatible.

The latter part is the real challenge here, because by default `oc get all -o json` writes cluster-specific information that causes a simple `oc create -f <file>` to fail. Hence, the exported JSON file needs to be filtered for specific prohibited fields and refactored to fit the target platform. Some fields need to be replaced and others modified, since removing them outright would strip too much information for the application to be recreated on the other side.
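To make the filtering step concrete, here is a minimal sketch of how an exported `List` could be cleaned up before re-import. The set of stripped fields is an illustrative assumption based on standard Kubernetes object metadata, not Openshifter's actual rule set, and `sanitize_item`/`sanitize_list` are hypothetical names. It shows both kinds of change described above: cluster-assigned fields are removed, while the namespace is rewritten rather than dropped.

```python
import copy

# Metadata that the source cluster assigns on creation. This list is an
# illustrative assumption, not Openshifter's actual filter.
CLUSTER_METADATA = ("uid", "resourceVersion", "creationTimestamp",
                    "selfLink", "generation", "managedFields")


def sanitize_item(item, target_namespace):
    """Return a copy of one exported object that `oc create` can accept."""
    obj = copy.deepcopy(item)
    obj.pop("status", None)  # runtime state; the target recomputes it
    meta = obj.setdefault("metadata", {})
    for field in CLUSTER_METADATA:
        meta.pop(field, None)
    if "namespace" in meta:
        # Modified rather than removed: point the object at the target project.
        meta["namespace"] = target_namespace
    spec = obj.get("spec", {})
    if obj.get("kind") == "Service" and spec.get("clusterIP") != "None":
        # Cluster IPs are allocated per cluster, so let the target assign
        # new ones. Headless services (clusterIP "None") keep theirs.
        spec.pop("clusterIP", None)
        spec.pop("clusterIPs", None)
    return obj


def sanitize_list(exported, target_namespace):
    """Filter the List returned by `oc get all -o json`.

    Objects with ownerReferences (e.g. pods created by a replication
    controller) are skipped, since their owners re-create them.
    """
    items = [
        sanitize_item(item, target_namespace)
        for item in exported.get("items", [])
        if not item.get("metadata", {}).get("ownerReferences")
    ]
    return {"apiVersion": "v1", "kind": "List", "items": items}
```

Feeding it the parsed output of `oc get all -o json` (for example via `json.load`) yields a `List` that can be written back to a file for `oc create -f`. A real tool also has to rewrite references such as image locations in the source cluster's internal registry, which this sketch leaves out.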

Openshifter is designed as a RESTful service: all processing happens in the server application, and requests are made either with curl or, for convenience, with our interactive client.
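Because all the work happens on the server, a client only has to build request URLs and move the chart bytes around. The sketch below mirrors the curl calls from the Example section using Python's standard library. It assumes the endpoint layout shown there (`/export`, `/delete` and `/import`, followed by base URL, project, user and password as path segments) and that the server accepts percent-encoded segments; the helper names are hypothetical and this is not the interactive client's code. TLS certificate handling is left out.

```python
import base64
import urllib.parse
import urllib.request

# Where the Openshifter server listens (assumed: localhost, as in the example).
OPENSHIFTER = "https://localhost:8443"


def endpoint(action, base, project, username, password):
    """Build /<action>/<baseURL>/<project>/<user>/<password>.

    Each segment is percent-encoded (keeping ':' for host:port) so that a
    '/' or '@' in a password cannot break the path.
    """
    parts = [urllib.parse.quote(p, safe=":") for p in (base, project, username, password)]
    return f"{OPENSHIFTER}/{action}/" + "/".join(parts)


def export_chart(creds):
    """Like `curl .../export/...` followed by `base64 -d`: return .tgz bytes."""
    with urllib.request.urlopen(endpoint("export", **creds)) as resp:
        return base64.b64decode(resp.read())


def delete_app(creds):
    """Like `curl .../delete/...`."""
    with urllib.request.urlopen(endpoint("delete", **creds)) as resp:
        return resp.read()


def import_chart(creds, tgz):
    """Like `curl -X POST --data-urlencode @_output.tgz .../import/...`."""
    # URL-encode the raw archive bytes, as curl's --data-urlencode does.
    body = urllib.parse.quote_from_bytes(tgz, safe="").encode("ascii")
    req = urllib.request.Request(endpoint("import", **creds), data=body, method="POST")
    with urllib.request.urlopen(req) as resp:
        return resp.read()
```

The `creds` dictionaries use the same keys as a credential set in the client's `input.json` (`base`, `project`, `username`, `password`), so one set can be passed straight through with `**creds`.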

Example

To better illustrate how the tool operates, we’ll walk through an example migration of a simple application, using both plain curl commands and the interactive client.

Setup

We will start with the setup. For this test we will look at a migration from Minishift to a production Openshift environment. The first step is to find our lab rat application. I usually choose the sample Django app from the default Openshift catalog. You can choose the variant with persistent storage to test the volume migration (assuming your environments support it). Now we just wait for it to finish deploying.

Migrating

For both of our instances, we need to know:

- The base URL of the Openshift instance. For Minishift this is <minishift_IP>:8443, but for other platforms it may not be that straightforward.

- The name of the namespace/project

- Username

- Password

With plain curl requests, we start by exporting from the source (assuming Openshifter is running on localhost) and decoding the response into a gzipped archive:

```shell
curl https://localhost:8443/export/<baseURL>/<project>/<user>/<password> > _output
base64 -d < _output > _output.tgz
```

Now we can delete the source application (optional, unless you want to import it back into the source):

```shell
curl https://localhost:8443/delete/<baseURL>/<project>/<user>/<password>
```

And now we import into the target:

```shell
curl -X POST --data-urlencode @_output.tgz https://localhost:8443/import/<baseURL>/<project>/<user>/<password>
```

And we are done! The application will now build and deploy on the target and will soon be online. Now let’s do it again with the interactive client:

With the interactive client, you can enter the credentials manually when prompted, or add any number of credential sets to input.json and choose among them when running the client. The file is structured as follows:

```json
{
  "credentials": {
    "some_instance": {
      "base": "<some_URL>",
      "project": "<project_name>",
      "username": "<username>",
      "password": "<password>"
    },
    "minishift": {
      "base": "<some_URL>",
      "project": "<project_name>",
      "username": "<username>",
      "password": "<password>"
    }
  }
}
```
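A client can read this file with the standard `json` module. The sketch below loads the credential sets and checks that each one carries the four fields listed in the structure above, failing early instead of halfway through a migration. `load_credentials` is a hypothetical helper, not the interactive client's own code.

```python
import json

REQUIRED = {"base", "project", "username", "password"}


def load_credentials(path="input.json"):
    """Return the name -> credential-set mapping stored in input.json."""
    with open(path) as f:
        sets = json.load(f)["credentials"]
    for name, creds in sets.items():
        missing = REQUIRED - creds.keys()
        if missing:
            # Report exactly which fields a set lacks.
            raise ValueError(f"credential set {name!r} is missing {sorted(missing)}")
    return sets
```

Choosing a source and a target then amounts to picking two entries, e.g. `sets["minishift"]` and `sets["some_instance"]`.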

The client also lets you choose the deletion policy:

- Move: Deletes the source application before importing. Useful when doing in-place migration testing, where you want to redeploy to the same Openshift instance.

- Ping-Pong: Deletes the application from the target before importing. This is useful for repeated round-trip migrations: when it finishes, the application exists on both ends, so you still have the source copy if something goes wrong.

- Copy: Deletes nothing; it simply exports and imports.
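The three policies differ only in which deletion, if any, runs between export and import. The sketch below encodes that ordering. Exporting before any deletion is our reading of the descriptions above, and `request` is a hypothetical callback (it could wrap HTTP calls to the export, delete and import endpoints); neither is taken from the client's source.

```python
from enum import Enum


class Policy(Enum):
    MOVE = "move"
    PING_PONG = "ping-pong"
    COPY = "copy"


def plan(policy):
    """Ordered (action, instance) steps for one migration under `policy`."""
    steps = [("export", "source")]  # export first, so no data is lost
    if policy is Policy.MOVE:
        steps.append(("delete", "source"))
    elif policy is Policy.PING_PONG:
        steps.append(("delete", "target"))
    steps.append(("import", "target"))
    return steps


def migrate(policy, source, target, request):
    """Run plan(policy); request(action, creds, payload) performs one call."""
    chart = None
    for action, side in plan(policy):
        creds = source if side == "source" else target
        if action == "export":
            chart = request(action, creds, None)
        else:
            # Only the import step carries the exported fat chart.
            request(action, creds, chart if action == "import" else None)
    return chart
```

For an in-place Move test, `source` and `target` are simply the same credential set.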

Performance

So how does it perform? Right now, the biggest bottleneck in execution time is waiting for the Openshift cluster to respond to the delete command, which can take anywhere between 5 and 30 seconds. If we omit deletion (a copy migration), it is just a matter of how long it takes to download and then upload the ‘fat chart’, which depends on its size, and to build the application on the target. For our simple example, the small app is running normally on the target within a minute of starting the process, including exporting, deleting, refactoring, importing and building.
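To check where the time goes on your own clusters, it helps to time each phase separately rather than the migration as a whole. A minimal sketch using a context manager; `phase` is a hypothetical helper, and the durations it records are whatever your environment produces, not figures from our tests.

```python
import time
from contextlib import contextmanager


@contextmanager
def phase(timings, name):
    """Record the wall-clock duration of one migration phase in `timings`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the phase raises, so failed steps are timed too.
        timings[name] = time.perf_counter() - start
```

Wrapping each export, delete and import call in `with phase(timings, "delete"):` and printing `timings` afterwards shows whether deletion dominates, as it does in our setup.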

Lessons Learnt & Code

Though still not perfect, and with a lot of room for additions and integration, Openshifter is a tool born of necessity: application migration is a process that has to be performed, and current tools don’t support it properly.

Thus our contribution is a way to automate the refactoring of an extracted application configuration (together with its data), a non-trivial task that Kubernetes does not appear to plan to address, given that it is deprecating the export functionality of its API. Integrated into an automatic import-export tool for migrating between Openshift instances, this is a first step toward the third-party implementation of Kubernetes migrations that has become necessary.

You can find the current Openshifter prototype implementation in its Git repository.